Website data (or 'source data') is often split across multiple sources and database tables. If a page loads without a cache, all of those sources may need to be queried and collated on every request. This can be time-consuming and costly in server resources.

A website cache resolves this issue by compiling the data before website users request it. Data from multiple sources is gathered, copied and stored in a single file. This file then acts as a buffer and serves the data to the user. Because only one file has to be read, far fewer resources are spent loading a page.
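The idea of compiling data once into a single file can be sketched in a few lines of Python. This is a minimal illustration, not how any particular CMS or caching plugin works: the `SOURCES` dicts are hypothetical stand-ins for database tables, and `build_page_data`, `cache_path` and `get_page` are names invented for this example. Cached pages are stored as JSON files in a temporary directory.

```python
import json
import os
import tempfile

# Hypothetical "source data": on a real site these would be database tables,
# APIs or other services. Plain dicts stand in for them here.
SOURCES = {
    "articles": {"home": {"title": "Welcome", "body": "Hello!"}},
    "authors": {"home": {"name": "Admin"}},
    "menus": {"home": ["Home", "About", "Contact"]},
}

# A fresh temporary directory holds one JSON file per cached page.
CACHE_DIR = tempfile.mkdtemp(prefix="page_cache_")


def build_page_data(page: str) -> dict:
    """Slow path: gather and collate one page's data from every source."""
    return {name: table.get(page) for name, table in SOURCES.items()}


def cache_path(page: str) -> str:
    return os.path.join(CACHE_DIR, f"{page}.json")


def get_page(page: str) -> tuple[dict, bool]:
    """Return (page data, served_from_cache)."""
    path = cache_path(page)
    if os.path.exists(path):
        # Fast path: a single file read instead of querying every source.
        with open(path, encoding="utf-8") as f:
            return json.load(f), True
    # Cache miss: compile the data once and store it in a single file.
    data = build_page_data(page)
    with open(path, "w", encoding="utf-8") as f:
        json.dump(data, f)
    return data, False
```

The first call to `get_page("home")` collates the sources and writes the file. Later calls read that one file and return `True` as the second value.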

Caching's speed advantage comes with a trade-off between speed and the accuracy of the data shown. When an edit occurs, the previously cached file needs to be refreshed or 'purged', at which point the cache is recompiled from the new data. This can sometimes frustrate administrators, who need to refresh the cache after making an edit.
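The purge step can be illustrated with a similar file-based sketch. It is a simplified assumption of how purging might work, not any specific product's behaviour: `purge` deletes the cached file, and the hypothetical `edit_article` helper stands in for an admin edit that updates the source and then purges. If the source changes *without* a purge, the stale cached copy keeps being served.

```python
import json
import os
import tempfile

# Hypothetical source data standing in for a database table.
SOURCES = {"articles": {"home": {"title": "Welcome"}}}
CACHE_DIR = tempfile.mkdtemp(prefix="page_cache_")


def cache_path(page):
    return os.path.join(CACHE_DIR, f"{page}.json")


def get_page(page):
    """Serve the cached file if it exists, otherwise compile and cache it."""
    path = cache_path(page)
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            return json.load(f)
    data = {name: table.get(page) for name, table in SOURCES.items()}
    with open(path, "w", encoding="utf-8") as f:
        json.dump(data, f)
    return data


def purge(page):
    """Delete the cached file so the next request recompiles it from source."""
    try:
        os.remove(cache_path(page))
    except FileNotFoundError:
        pass  # nothing was cached yet


def edit_article(page, title):
    """Hypothetical admin edit: update the source, then purge the stale copy."""
    SOURCES["articles"][page] = {"title": title}
    purge(page)
```

Purging the whole page on every edit keeps the logic simple, at the cost of one slow, uncached request after each change.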

Storing the cache

A cache is commonly stored either on disk or in memory (RAM). Storing cached files on disk is costlier in terms of performance because disk reads and writes are slower than memory access. A solid-state drive (SSD) has advantages over its predecessor, the mechanical hard disk drive (HDD): SSDs provide faster read and write access but cost more per unit of storage.
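The two storage options can be compared side by side with two small cache classes that share the same `get`/`set` interface. Both classes are hypothetical sketches. `DiskCache` writes JSON files, so entries survive a restart but every read touches the disk. `MemoryCache` keeps entries in RAM, which is faster but limited, so it evicts the least recently used entry once `max_items` is reached. LRU eviction is one common policy, chosen here as an assumption.

```python
import json
import os
import tempfile
from collections import OrderedDict


class DiskCache:
    """Stores each entry as a JSON file: survives restarts, but every read hits the disk.
    Assumes keys are safe to use as file names."""

    def __init__(self, directory=None):
        self.directory = directory or tempfile.mkdtemp(prefix="disk_cache_")

    def _path(self, key):
        return os.path.join(self.directory, f"{key}.json")

    def get(self, key):
        try:
            with open(self._path(key), encoding="utf-8") as f:
                return json.load(f)
        except FileNotFoundError:
            return None  # cache miss

    def set(self, key, value):
        with open(self._path(key), "w", encoding="utf-8") as f:
            json.dump(value, f)


class MemoryCache:
    """Keeps entries in RAM: fastest to read, lost on restart and limited in size.
    The least recently used entry is evicted once max_items is exceeded."""

    def __init__(self, max_items=128):
        self.max_items = max_items
        self._data = OrderedDict()

    def get(self, key):
        if key not in self._data:
            return None  # cache miss
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def set(self, key, value):
        self._data[key] = value
        self._data.move_to_end(key)
        if len(self._data) > self.max_items:
            self._data.popitem(last=False)  # evict the least recently used entry
```

Because both classes expose the same methods, a site could swap one for the other, or check `MemoryCache` first and fall back to `DiskCache` on a miss.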

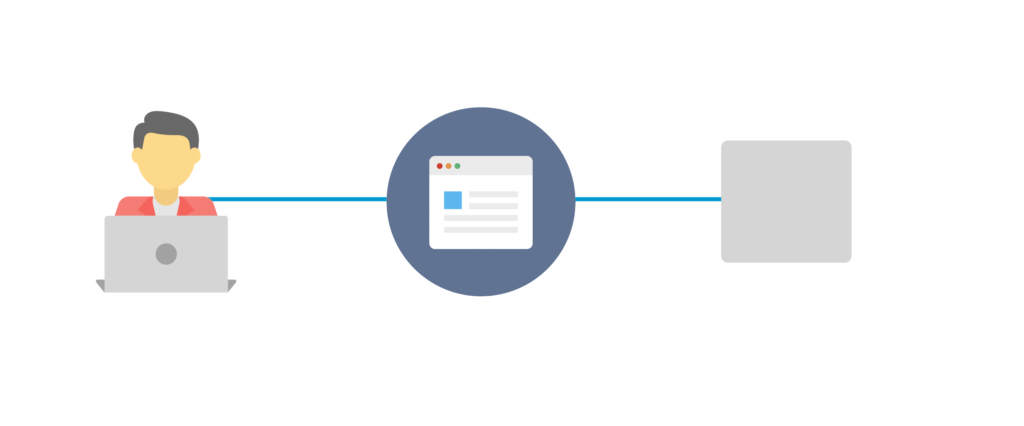

Page load with no cache

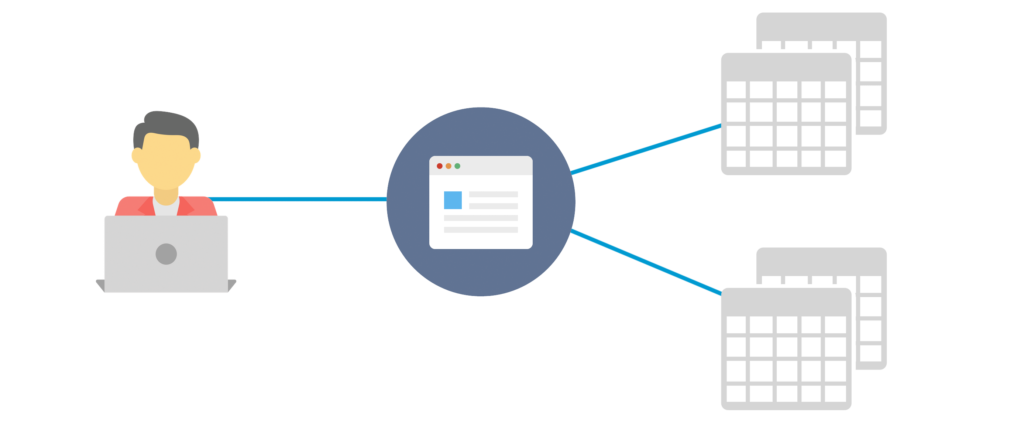

Page load with a cache